About Spark

1. Introduction to Spark

Spark is a popular solution on the market, and overcomes most of the limitations of Hadoop MapReduce (results are written to disk resulting in longer execution times, and it is difficult to express complex operations with Hadoop MapReduce because it is limited and not very expressive).

Spark is able of interfacing with many data sources, as shown in the following:

For instance, it can interface with MySQL and PostgreSQL data, on Hadoop HDFS, there is also NoSQL, the injection with Kafka, the cached databases with Redis…

It is a universal tool that brings all the connectivity at the level of data recovery, then it allows to distribute the calculations in an efficient way: it tries to take advantage of all the existing resources, with the scalability that is taken into account when there are new resources available.

It has modules to do just about anything: SQL queries, machine learning algorithms, graph processing, stream processing (for instance in the case of data coming from sensors)…

2. Spark architecture

Just like MapReduce that doesn’t play well with large scale tasks, the main reason why Spark is useful is that it leverages task parallelisation on multiple workers, and it is runs faster, and is more efficiently managed.

The architecture of Spark is represented in the following figure.

Globally, what makes Spark work is the Spark Core which is the software part on top.

Just below there is the group of possibilities to run Spark (at the hardware level): either on your local machine (Spark standalone), or there is the possibility to manage this hardware part on a cluster using a cluster manager for resources allocation: there is YARN (Yet Another Resource Negotiator) introduced with Hadoop, MESOS which is an Apache project, and even Kubernetes…

And at the very bottom, there is the layer representing where the data is located.

Spark works with 4 different API: via Scala (native), Java, Python (PySpark), R. In terms of performance, Scala is about 10 times faster than Python.

3. Spark cluster, drivers and workers

Spark is based on a master-slave architecture (cf the following figure) i.e. the master coordinates the workers to execute the tasks simultaneously.

Before continuing, I would like to mention that driver, master, name node mean the same thing. It is like worker, slave and data node that are the same thing.

When Spark is used, it starts by communicating with the master machine, a Spark entry point to this machine is the SparkContext (or SparkSession). To import and launch a Spark session, you just need to write this line of code:

from pyspark.sql import SparkSession

spark = SparkSession.builder.getOrCreate()

In the back it communicates with a cluster manager. And it’s the latter that deals with the resource allocation to get the tasks done. It will choose which computations to allocate to which machines and at which quantities.

Within the cluster, there are workers that have a cache part to store data, and an execution part to perform tasks on data available on these nodes. Spark is therefore faster than Hadoop since Spark does the processing in the main memory of the worker nodes and prevents unnecessary operations with the disks.

4. Fault tolerance - High availability

Hadoop MapReduce is known for data storage on disks. Considering the fact that the Spark data is stored in a cache, we can ask ourselves the question of whether something is lost in terms of guarantee in case a node crashes or a job does not run. In fact, there are two mechanisms implemented in Spark to deal with failure: fault tolerance and high availability.

Fault tolerance is the ability for Spark to restart a job when the machine fails, it keeps track of the job that was running and is able to go back at a certain point, and not starting from scratch. It is able to restart the job and guarantee the result behind it.

High availability is the ability for a system to keep working after one or more components fail. If it’s a software part that crashes, the job is lost and it is re-run somewhere else, with a small delay to get the result.

In both cases, what happens is that the job is re-launched afterwards.

5. Spark mechanisms

Resilient Distributed Datasets

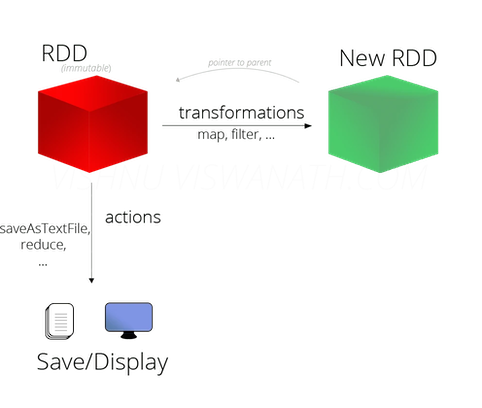

Regarding the big mechanisms on Spark, there are what called Resilient Distributed Datasets (RDD). These are partitioned collections of elements that are distributed on the different cluster nodes, it’s just how to have a large dataset (several TB for instance) and separate it on different nodes. This is the basis for manipulating data in Spark, now there is an abstraction on top which is the dataframe (similar to the one used in Pandas). RDD are inherent in Spark, they are immutable (not modifiable), they can contain different types of objects, they are fault tolerant, and they can be reused on multiple operations.

Lazy evaluation

It is possible to apply transformations to RDD (e.g. Map) to get a new RDD. It can also be applied actions, it may be “show me this”, and it is this part that the end user will see. This is all called lazy evaluation where there are transformations where nothing is executed, and actions where there is the sequence of transformations/actions that is executed.

Shuffling

It can happen that it is desired to have a better distribution of the data on the various nodes, one can make a manual shuffling to distribute the jobs, especially if one has many machines at disposal. Now, how to make shuffling happen ? Just with this line of code:

rdd = session.SparkContext.parallelize(0 until 1000)

where a session is defined and .parallelize() allows parallelization by default, and the initial object is present on all the cluster nodes.

Some useful transformations in Spark

-

.persist() to force to store an object into fast RAM memory (cache), at least on one node of the cluster.

-

.broadcast() allows to force caching a dataset, for instance when one element is present on all the cluster nodes and it is needed to do a job or a calculation (for instance the weights to be trained for a machine learning model)

-

.getNumPartitions: it is not a transformation but it gives the number of partitions

-

.mapPartitions() allows to distribute the object on all the cluster nodes in an equitable way.

-

.repartition() to define a number of partitions, for example when the number of partitions defined by a RDD creator is not the one we want.

-

.filter() to filter objects using conditions

Leave a comment